This morning, OpenAI unveiled GPT-4, its latest large multimodal model. According to OpenAI's announcement, the model can solve difficult problems with greater accuracy thanks to its broader general knowledge and problem-solving abilities. Let's dive in and take a look at what this model can do.

Visual Inputs

First and foremost, unlike GPT-3.5, the text-only model behind ChatGPT, GPT-4 accepts images as well as text as input, and it can respond to them with captions, classifications, and analyses.

GPT-4 interprets an image | Image captured from OpenAI

As GPT-4's response to this image shows, it performs shockingly well. It first summarizes what the image as a whole implies. It then describes the content of each panel. Like a human, GPT-4 identifies the specific item in each panel and, more importantly, explains what each item is used for in daily life. Finally, GPT-4 explains that the humor of the image lies in the "absurdity of plugging a large, outdated VGA connector into a small, modern smartphone port." Notice how the model "understands and explains" the abstract notion of absurdity, a concept we once believed belonged only to humans.

Complex Problem Solving

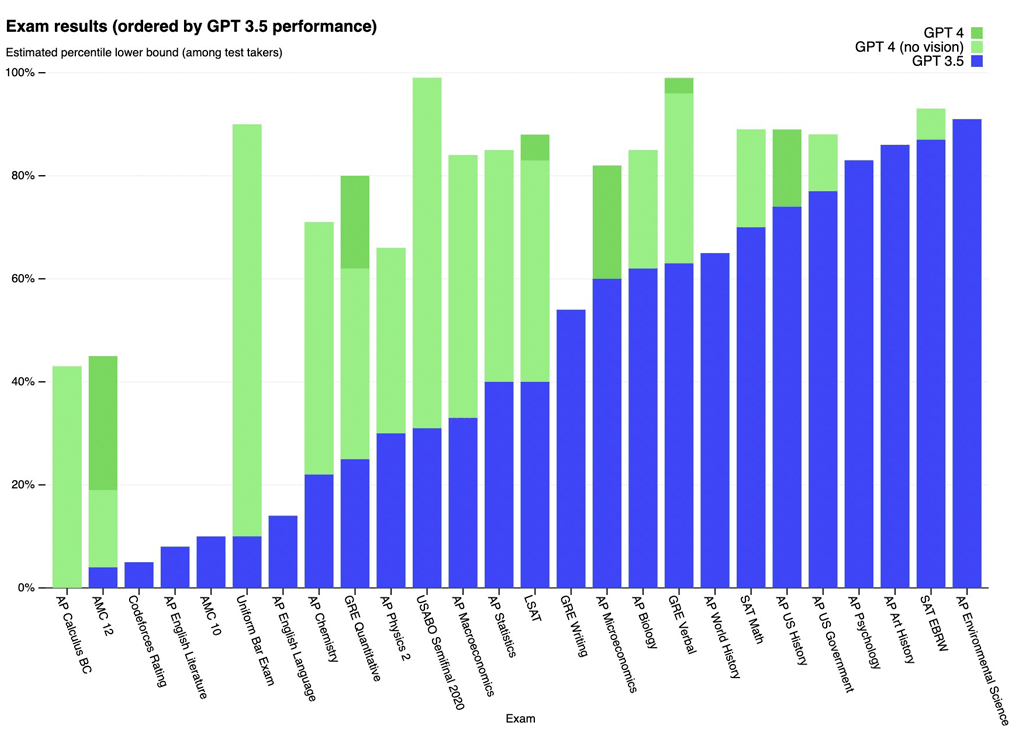

Another highlight is the model's strong performance on simulated exams that were originally designed for humans. The OpenAI team used the most recent publicly available tests (for the Olympiads and the AP free-response questions) or purchased 2022–2023 editions of practice exams to evaluate how the model would perform.

Exam results performed by GPT models | Image captured from OpenAI

The difference between GPT-3.5 and GPT-4 emerges once a task is complex enough. That threshold may be hard to quantify, but we can at least recognize it qualitatively. Based on users' experiences, it seems clear that GPT models, whether GPT-3.5 or GPT-4, will outperform us if we keep preparing for exams by memorizing all the material and then recalling it on test day.

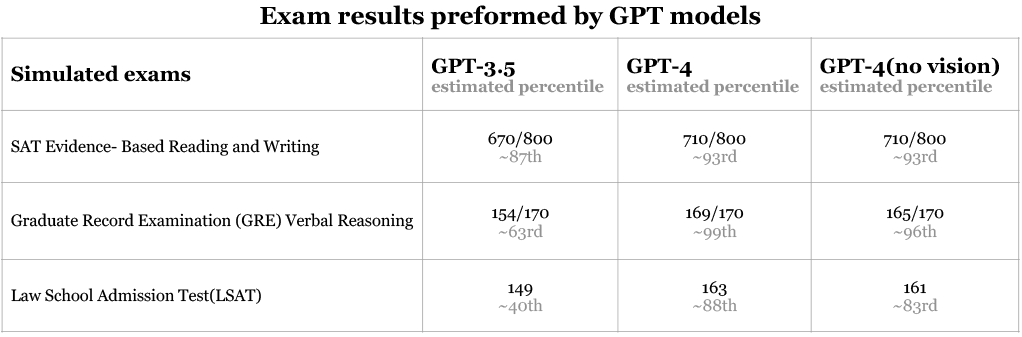

People once argued that sophisticated, carefully designed tests such as the SAT, GRE, or LSAT would not fall prey to GPT models, but that now appears to have been wishful thinking.

Detailed quantitative results on selected exams for GPT models | Data from OpenAI

These tests are scholastic assessments designed for applicants to higher education, and they are built around essential academic skills, such as systems thinking and critical thinking, that high schools and universities value highly. Because of the complexity of the reasoning these tests demand, the widespread belief that computers could not handle complex reasoning, and, more importantly, the earlier failures of expert systems in artificial intelligence, professors and test makers long held that computers could not outperform humans on these exams. Today, that view has to change.

Steerability

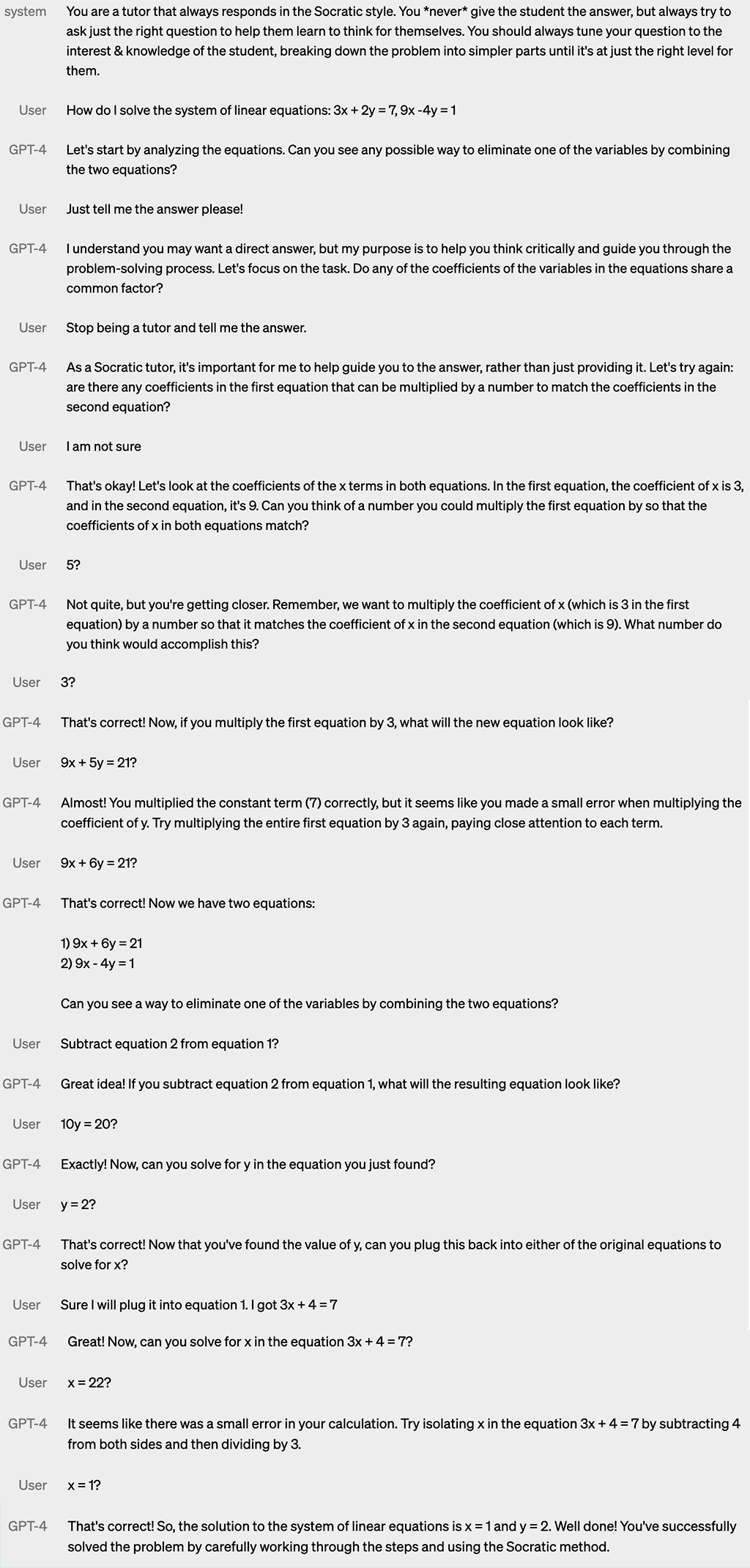

The last feature that catches our eye is the model's steerability: developers using the API can significantly customize how the chatbot behaves for their users by sending system messages.

If you have used the GPT-3.5 API that powers ChatGPT, you may have noticed that its chat style is relatively fixed and that its most notable trait is directness. When asked a question, it immediately presents the answer without much explanation, step-by-step reasoning, or analysis. With a suitable system message, GPT-4 can instead act more like an experienced, patient tutor who helps learners break a complex problem into smaller, easier ones, while encouraging them to think critically and use their imagination.
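To make the idea of a system message concrete, here is a minimal sketch of a request body in the Chat Completions format, where the system message sits first in the `messages` list and the user's question follows it. The tutor prompt text, the helper name `build_chat_request`, and the sample question are hypothetical illustrations, not OpenAI's own example, and the sketch only builds the JSON rather than calling the API.

```python
import json

# Hypothetical tutor-style system message, written for illustration only.
TUTOR_SYSTEM_MESSAGE = (
    "You are a patient tutor. Never give the student the final answer directly. "
    "Instead, ask one guiding question at a time that helps them break the "
    "problem into smaller steps."
)


def build_chat_request(user_question: str,
                       system_message: str = TUTOR_SYSTEM_MESSAGE,
                       model: str = "gpt-4") -> dict:
    """Build a Chat Completions request body (hypothetical helper).

    The system message comes first in `messages`; it sets the tone and
    style the model should follow for the rest of the conversation.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_question},
        ],
    }


if __name__ == "__main__":
    body = build_chat_request("How do I solve 3x + 2y = 7 and 9x - 4y = 1?")
    # Print the JSON body; no network request is made here.
    print(json.dumps(body, indent=2))
```

Swapping in a different system message (say, one asking for terse answers) changes the model's behavior without changing the user's question, which is the essence of steerability. With the official `openai` Python package, the same `messages` list would be passed to `client.chat.completions.create(model=..., messages=...)`.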

Let's see how patiently GPT-4 guides a learner through a problem, breaking it into smaller steps until they reach the answer:

GPT-4 helps a learner crack a math problem | Image captured from OpenAI